Complexity of LLM deployment

Introduction

LLMs are deep learning models that understand and generate natural-language text and code. They are trained on huge amounts of data and require high compute power for both training and inference.

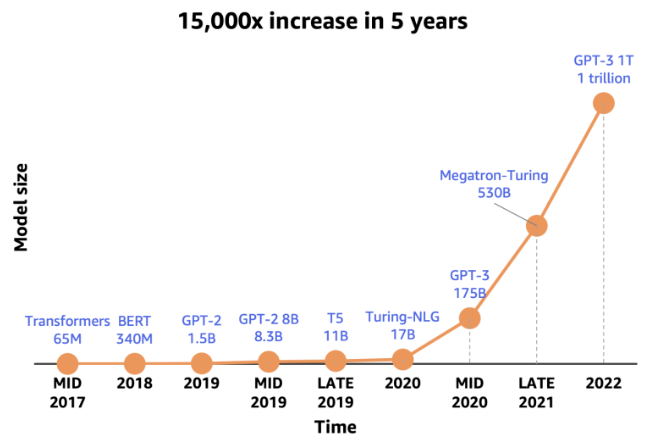

Unlike traditional NLP models, LLMs require far more compute power and storage because of their billions or even trillions of trained parameters, a number that has grown rapidly over the past few years.

The size of large NLP models is increasing.

As a result, fine-tuning and running inference on LLMs have become more costly and time-consuming, which is why their deployment has become more complex.

In this document, we go through the main challenges in a simplified way to give an idea of what to consider when putting LLMs into production.

LLM Deployment Complexity

- High compute resources such as GPUs (either a high-end GPU like the A100 or H100, or multiple GPUs).

- Large amounts of memory and storage capacity, due to the large model size.

- Scaling to handle high traffic can be difficult and costly.

- Trade-offs: cost (compute) vs. accuracy, accuracy vs. latency, and cost vs. throughput.

- Deployment strategies, rollbacks, and A/B testing if necessary.

- Privacy and security handling.

- Model versioning (see the neptune.ai article).

- High energy consumption, which leads to a large carbon footprint.
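To make the memory point concrete, here is a minimal sketch that estimates how much memory the weights alone occupy at a given precision. The function name and the bytes-per-parameter table are illustrative assumptions; the estimate deliberately ignores activations, the KV cache, and framework overhead, so real deployments need more.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Approximate memory (GB, 1 GB = 1e9 bytes) needed just to hold the weights.

    Activations, the KV cache, optimizer state and runtime overhead are ignored,
    so this is a lower bound, not a sizing guarantee.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for params in (7e9, 70e9, 175e9):
        for precision in ("fp32", "fp16", "int8"):
            print(f"{params / 1e9:>5.0f}B params @ {precision}: "
                  f"{weight_memory_gb(params, precision):8.1f} GB")
```

For example, a 7-billion-parameter model needs about 14 GB in FP16 just for its weights, and a 70-billion-parameter model about 140 GB, which is already more than a single 80 GB GPU can hold.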

Model Parallelization

Tensor-based parallelism or block-wise model parallelism:

Tensors here refer to model parameters or intermediate activations.

- The model is divided into smaller chunks (blocks), and each block is assigned to a processor/GPU.

- Intermediate results (activations) are passed between the blocks.

- This is used mostly when the model is too large to fit on one device.

- The inputs are passed through the blocks sequentially.

Example: Consider an LLM with 100 blocks and four GPUs. In block-wise model parallelism, the blocks could be distributed across the GPUs, with GPU 1 processing blocks 1 to 25, GPU 2 processing blocks 26 to 50, GPU 3 processing blocks 51 to 75, and GPU 4 processing blocks 76 to 100.
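The 100-block example can be sketched in plain Python. This is a minimal sketch, assuming blocks are ordinary functions and that device placement is only simulated (no real GPU transfer happens); `partition_blocks` and `blockwise_forward` are hypothetical helper names, not a framework API.

```python
from typing import Callable, List, Tuple

# A "block" is modelled as a plain function from activation to activation.
Block = Callable[[float], float]


def partition_blocks(num_blocks: int, num_devices: int) -> List[Tuple[int, int]]:
    """Return one contiguous (first, last) block range per device, 1-indexed."""
    base, extra = divmod(num_blocks, num_devices)
    ranges: List[Tuple[int, int]] = []
    start = 1
    for device in range(num_devices):
        # The first `extra` devices take one more block when the split is uneven.
        size = base + (1 if device < extra else 0)
        ranges.append((start, start + size - 1))
        start += size
    return ranges


def blockwise_forward(blocks: List[Block], ranges: List[Tuple[int, int]], x: float) -> float:
    """Pass the input through each device's blocks in order.

    On real hardware each range would live on its own GPU, and the activation
    leaving one range would be copied to the next GPU before continuing.
    """
    for first, last in ranges:
        for block in blocks[first - 1:last]:
            x = block(x)
        # (device-to-device transfer of `x` would happen here)
    return x


if __name__ == "__main__":
    print(partition_blocks(100, 4))  # [(1, 25), (26, 50), (51, 75), (76, 100)]
    toy_blocks = [lambda v: v + 1.0 for _ in range(100)]  # stand-ins for real blocks
    print(blockwise_forward(toy_blocks, partition_blocks(100, 4), 0.0))  # 100.0
```

Contiguous ranges keep the number of cross-device transfers to one per device boundary, which is why block-wise splits are usually made this way.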

Token-wise model parallelism / data parallelism:

- The model is replicated across different devices/GPUs.

- This works best for large input batches.

- The input batch is split into smaller sub-batches, and each replica processes its share independently.

- The outputs are then gathered into a single result.
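A minimal sketch of the split, replicate, and gather steps, assuming a toy `model` (here `str.upper`) and using threads only to stand in for GPUs; `split_batch` and `data_parallel_infer` are hypothetical names. In a real deployment each replica would run on its own device.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence

Model = Callable[[str], str]


def split_batch(batch: Sequence[str], num_replicas: int) -> List[List[str]]:
    """Split a batch into `num_replicas` contiguous sub-batches of near-equal size."""
    base, extra = divmod(len(batch), num_replicas)
    shards: List[List[str]] = []
    start = 0
    for i in range(num_replicas):
        size = base + (1 if i < extra else 0)
        shards.append(list(batch[start:start + size]))
        start += size
    return shards


def data_parallel_infer(model: Model, batch: Sequence[str], num_replicas: int) -> List[str]:
    """Run each sub-batch on its own model replica, then gather the outputs in order."""
    shards = split_batch(batch, num_replicas)
    # Each worker thread stands in for one GPU holding a full copy of `model`.
    with ThreadPoolExecutor(max_workers=num_replicas) as pool:
        per_replica = list(pool.map(lambda shard: [model(x) for x in shard], shards))
    # Gather step: concatenate the per-replica results back into one list.
    return [y for outputs in per_replica for y in outputs]


if __name__ == "__main__":
    prompts = ["a", "b", "c", "d", "e"]
    print(split_batch(prompts, 2))                     # [['a', 'b', 'c'], ['d', 'e']]
    print(data_parallel_infer(str.upper, prompts, 2))  # ['A', 'B', 'C', 'D', 'E']
```

`ThreadPoolExecutor.map` returns results in input order, so the gathered output lines up with the original batch.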

Pipeline-based parallelism

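- Splits the LLM into segments (stages), with each stage placed on its own device and operating in a pipeline fashion.

- The data flows sequentially from one stage to the next.

- Improves throughput through continuous, overlapping processing: the input is split into micro-batches, so different stages can work on different micro-batches at the same time.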
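To see why overlapping helps, here is a sketch of a simple forward-only schedule, assuming every stage takes exactly one time step per micro-batch and ignoring communication cost. Stage `s` works on micro-batch `t - s` at step `t`, so with S stages and M micro-batches the whole batch finishes in S + M - 1 steps instead of S × M. The function name is hypothetical.

```python
from typing import List, Optional


def pipeline_schedule(num_stages: int, num_microbatches: int) -> List[List[Optional[int]]]:
    """Forward-only pipeline schedule.

    Row t lists, for each stage, the micro-batch it processes at time step t
    (None means the stage is idle). Stage s handles micro-batch t - s.
    """
    total_steps = num_stages + num_microbatches - 1
    schedule: List[List[Optional[int]]] = []
    for t in range(total_steps):
        row: List[Optional[int]] = []
        for s in range(num_stages):
            mb = t - s
            row.append(mb if 0 <= mb < num_microbatches else None)
        schedule.append(row)
    return schedule


if __name__ == "__main__":
    stages, microbatches = 4, 8
    sched = pipeline_schedule(stages, microbatches)
    for t, row in enumerate(sched):
        cells = " ".join("--" if mb is None else f"m{mb}" for mb in row)
        print(f"t={t:2d}: {cells}")
    # 11 steps with overlap vs 32 if each micro-batch ran through all stages alone
    print(f"pipelined steps: {len(sched)}, without overlap: {stages * microbatches}")
```

The idle cells at the start and end (the pipeline "bubble") shrink relative to the total as the number of micro-batches grows.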

Layer-based parallelism:

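- Different layers of the LLM are assigned to different devices or processors.

- Each device holds and processes only its assigned layers.

- This reduces the memory usage on each processor.

- Because each layer needs the output of the previous one, the devices only work in parallel when several inputs (micro-batches) are in flight at once, as in pipeline-based parallelism.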

Example: Consider an LLM with 12 layers and four GPUs. The layers could be divided such that GPU 1 processes layers 1 to 3, GPU 2 processes layers 4 to 6, GPU 3 processes layers 7 to 9, and GPU 4 processes layers 10 to 12.
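The per-device memory saving in the 12-layer example can be estimated with a short sketch. It assumes, hypothetically, that every layer has the same parameter count and that weights are stored in FP16 (2 bytes per parameter); activations and the KV cache are ignored, and the helper names are illustrative.

```python
import math
from typing import List, Sequence


def assign_layers(num_layers: int, num_devices: int) -> List[List[int]]:
    """Give each device a consecutive group of layers (1-indexed).

    Uses ceil(num_layers / num_devices) layers per group, so with uneven
    counts the last group is smaller and some devices may receive nothing.
    """
    per_device = math.ceil(num_layers / num_devices)
    return [list(range(first, min(first + per_device, num_layers + 1)))
            for first in range(1, num_layers + 1, per_device)]


def per_device_memory_gb(layer_params: Sequence[float], num_devices: int,
                         bytes_per_param: int = 2) -> List[float]:
    """Weight memory each device must hold, in GB (1 GB = 1e9 bytes)."""
    groups = assign_layers(len(layer_params), num_devices)
    return [sum(layer_params[i - 1] for i in group) * bytes_per_param / 1e9
            for group in groups]


if __name__ == "__main__":
    print(assign_layers(12, 4))  # [[1, 2, 3], [4, 5, 6], [7, 8, 9], [10, 11, 12]]
    layers = [0.5e9] * 12        # hypothetical: 0.5B parameters per layer
    print(per_device_memory_gb(layers, 4))  # [3.0, 3.0, 3.0, 3.0]
```

With these assumed sizes, one GPU would need 12 GB for all the weights, while each of the four GPUs holds only 3 GB.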

Hybrid parallelism

- Combines multiple parallelism strategies, for example data parallelism with model parallelism, or data parallelism with pipeline parallelism.
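One common way to organise hybrid data + pipeline parallelism is as a grid of GPUs. The sketch below is a minimal illustration under an assumed rank layout (consecutive ranks form one pipeline, and each pipeline is one data-parallel replica); real frameworks may order ranks differently, and `build_hybrid_mesh` is a hypothetical name.

```python
from typing import Dict, Tuple


def build_hybrid_mesh(world_size: int, pipeline_degree: int) -> Dict[int, Tuple[int, int]]:
    """Map each global GPU rank to (data_parallel_rank, pipeline_stage).

    Consecutive ranks form one pipeline (one full copy of the model split into
    `pipeline_degree` stages); each pipeline is a separate data-parallel replica.
    """
    if world_size % pipeline_degree != 0:
        raise ValueError("world_size must be a multiple of pipeline_degree")
    # divmod(rank, P) == (rank // P, rank % P) == (replica index, stage index)
    return {rank: divmod(rank, pipeline_degree) for rank in range(world_size)}


if __name__ == "__main__":
    mesh = build_hybrid_mesh(world_size=8, pipeline_degree=4)
    for rank, (replica, stage) in mesh.items():
        print(f"GPU {rank}: replica {replica}, stage {stage}")
```

With 8 GPUs and 4 pipeline stages, this gives 2 data-parallel replicas; GPU 5, for instance, runs stage 1 of replica 1.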

Frameworks that support this today

TensorFlow and PyTorch

Widely used open-source frameworks that support model optimization and acceleration, including GPU utilization and tensor parallelism.

ONNX Runtime

LLMs must first be exported to the ONNX format. ONNX Runtime then provides optimization, acceleration, and parallel execution.

Hugging Face Transformers:

Provides tools for fine-tuning, deployment, and inference of LLMs. It also provides a high-level API for LLM inference and efficient batch processing.

NVIDIA Triton Inference Server (with the FasterTransformer custom backend)

Supports high-performance deployment of deep learning models and LLMs. It also offers scalability, load balancing, and model versioning.

FasterTransformer contains a highly optimized implementation of the transformer block, covering both the encoder and decoder parts. Using this block, you can run inference for full encoder-decoder architectures like T5, encoder-only models such as BERT, and decoder-only models such as GPT. It is written in C++/CUDA and relies on the highly optimized cuBLAS, cuBLASLt, and cuSPARSELt libraries, which makes it possible to build a very fast transformer inference pipeline on GPUs. FasterTransformer has two parts. The first is the library, which is used to convert a trained Transformer model into an optimized format ready for distributed inference. The second is the backend, which Triton uses to execute the model on multiple GPUs.

Types of GPUs

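NVIDIA A100:

- High memory capacity: 40 GB or 80 GB per GPU, depending on the variant.

- 64 FP32 CUDA cores per SM, and 8192 FP32 CUDA cores on the full GA100 chip (the shipping A100 enables 108 SMs, i.e. 6912 FP32 CUDA cores).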
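Whether a model fits on one A100 or needs several can be checked with quick arithmetic. A minimal sketch, assuming FP16 weights (2 bytes per parameter), the 40 GB and 80 GB A100 variants, and a hypothetical 90% usable-memory factor; the function name is illustrative.

```python
import math


def min_gpus_for_weights(num_params: float, bytes_per_param: int,
                         gpu_memory_gb: float, usable_fraction: float = 0.9) -> int:
    """Smallest number of GPUs whose combined memory can hold the model weights.

    `usable_fraction` is an assumed headroom factor for the CUDA context and
    runtime buffers; activations and the KV cache are NOT included.
    """
    weights_gb = num_params * bytes_per_param / 1e9
    return math.ceil(weights_gb / (gpu_memory_gb * usable_fraction))


if __name__ == "__main__":
    for params in (13e9, 70e9):
        for mem in (40, 80):
            n = min_gpus_for_weights(params, bytes_per_param=2, gpu_memory_gb=mem)
            print(f"{params / 1e9:.0f}B params in FP16 on {mem} GB A100s: >= {n} GPU(s)")
```

Under these assumptions a 13B model fits on a single 40 GB card, while a 70B model needs at least four 40 GB or two 80 GB GPUs before any memory is reserved for inference-time state.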

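NVIDIA H100:

- Includes a dedicated Transformer Engine (using FP8 mixed precision) designed to accelerate trillion-parameter language models.

- 128 FP32 CUDA cores per SM, and 18432 FP32 CUDA cores on the full GH100 chip (the H100 SXM5 enables 132 SMs, i.e. 16896 FP32 CUDA cores).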

NVIDIA T4 (multi-GPU setup):

- Efficient inference with lower power consumption (70 W) than high-end GPUs like the A100.

GCP TPU v3:

- Large memory capacity, up to 256 GB.

- High inference performance thanks to mixed-precision (bfloat16) operations.
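TPU mixed precision relies on the bfloat16 format. A minimal sketch, using only the Python standard library, of what converting a value to bfloat16 does; the helper name is hypothetical, and truncation is a simplification of the round-to-nearest behaviour real hardware typically uses.

```python
import struct


def to_bfloat16(x: float) -> float:
    """Convert a float to bfloat16 by truncation (keep the top 16 bits of its FP32 encoding).

    bfloat16 keeps FP32's sign bit and 8 exponent bits but only 7 mantissa bits,
    so it covers the same range as FP32 with much less precision.
    """
    bits = struct.unpack(">I", struct.pack(">f", x))[0]
    return struct.unpack(">f", struct.pack(">I", bits & 0xFFFF0000))[0]


if __name__ == "__main__":
    print(to_bfloat16(3.14159))  # 3.140625 (precision lost)
    print(to_bfloat16(1e38))     # still about 1e38 (FP32-like range kept)
```

Keeping the FP32 exponent is the design choice that matters here: values far beyond FP16's maximum of 65504 remain representable, which reduces overflow issues when computing in reduced precision.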

AWS Inferentia:

- A custom-built deep learning inference chip developed by AWS, designed to deliver high performance at low latency for inference workloads such as LLMs.

Cerebras CS-2:

- Built around the Cerebras Wafer-Scale Engine (WSE-2), one of the largest and most powerful chips ever created for deep learning.

- Offers massive parallelism and large memory capacity.

- Offers optimizations specifically for LLMs, such as support for very large models, parallel processing, and high-speed memory access.

LLM Complexity in Production

Cost-intensive architecture, processing, and experimentation

It should come as no surprise that models at such a massive scale are highly costly to operationalize. Running large language models requires extensive infrastructure to distribute the parameters across multiple processing units and memory blocks. On top of that, experimentation costs keep adding up, to the point where you may exhaust your resources before your model ever makes it to production.

Issues associated with language misuse

Large language models are built using massive amounts of data from disparate sources. The problem with collecting vast, heterogeneous data is the bias that stems from the culture and society behind each data source. Moreover, verifying the credibility of so much information takes considerable time and effort. When a large language model is trained on biased and potentially false data, it can amplify those discrepancies, leading to erroneous and discriminatory outcomes.

In addition to these data-source risks, making LLMs understand human logic and the different contexts behind the same data is very challenging. The most critical challenge is to faithfully reflect the diversity of human beliefs and opinions in large language models.

Fine-tuning for downstream tasks

Large language models are generally accurate and efficient on large-scale, general-purpose data. However, it can be challenging to repurpose them for specific domains and tasks. This repurposing requires fine-tuning existing large language models, often into smaller models for specific downstream tasks. Although fine-tuned models can retain the performance benefits of their parent model, getting them right takes time. Details such as which data to use, which hyperparameters to choose, and which base model to tune are crucial and equally hard to figure out. You need to work out these details carefully to maintain the explainability of your fine-tuned model.

Hardware Problems

Even if your enterprise has the budget for large-scale distributed deployments, finding a suitable hardware configuration and distribution strategy is another challenge. Since there is no one-size-fits-all hardware stack for LLMs, it is up to you to design an optimal hardware plan for your model. You will also need optimized algorithms that let your computational resources scale with your LLMs.